This article explains how to deploy Postgres on distributed Kubernetes infrastructure. It covers a blue-green deployment strategy, backups, the Tanzu Postgres Operator, and Google Kubernetes Engine (GKE) clusters. Once Postgres is running on Kubernetes, you also need to keep a backup of your data so that you can recover from a disaster. So how do you use Kubernetes to deploy Postgres?

Blue-green deployment strategy

A blue-green deployment strategy for Postgres on Kubernetes runs two environments side by side: blue, which serves live traffic, and green, which holds the new version. For example, you may want to create two clusters for each customer to make it easier to switch between the two instances. However, once you deploy your application to the green environment, you can’t make any further changes until the application has run on the green cluster.

A blue-green deployment strategy for Postgres on Kubernetes is a good fit for many situations, but it has challenges. For example, during the initial switch to the green environment, your users will experience an application session failure and have to log back in to the app. Additionally, once you have finished testing and green has taken over, you may be unable to roll back to the blue environment. If this is the case, you’ll likely want to add a manual intervention step for each new version.
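One common way to perform the switch on Kubernetes is to keep a single Service in front of both environments and change which pods its selector matches. The following is a minimal sketch of that idea, not a complete deployment. The Service name `postgres`, the label keys `app` and `track`, and the helper `service_manifest` are hypothetical names chosen for illustration.

```python
import json

VALID_TRACKS = ("blue", "green")


def service_manifest(active_track, name="postgres", port=5432):
    """Build a Service manifest that routes traffic to one environment.

    The name and the label keys are illustrative assumptions.
    """
    if active_track not in VALID_TRACKS:
        raise ValueError(f"active_track must be one of {VALID_TRACKS}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Only pods that carry both labels receive traffic.
            "selector": {"app": name, "track": active_track},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


if __name__ == "__main__":
    # Emit the manifest that points clients at the green environment.
    print(json.dumps(service_manifest("green"), indent=2))
```

Because kubectl accepts JSON manifests as well as YAML, the printed output could be saved to a file and applied once the green environment has been checked. Re-running the script with `"blue"` produces the manifest that points traffic back, although that alone does not bring back data written to green in the meantime.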

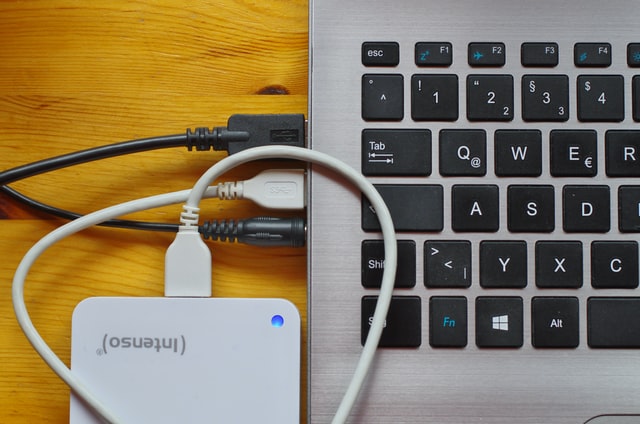

Keeping a backup of your data

When deploying Postgres on Kubernetes, it’s vital to back up your data to prevent loss. The database’s base image and the Postgres instance need to be updated from time to time, and an update that goes wrong can damage your data. To reduce this risk, create a cluster with multiple replicas. This way, you can also test recovery regularly.

You can customize your backup by specifying the number of replicas or objects to back up. For example, you can use the pg_dumpall command to back up your data to a separate location. Alternatively, you can back up your entire namespace. Choose whichever option you prefer and click the Backup button. After you start the backup, your cluster will show the Pending or In Progress state until the backup completes.
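As a rough illustration of the pg_dumpall option, the sketch below builds the command line for a timestamped dump file. It only assembles the arguments and does not run anything. The host, user, and backup directory are placeholder values, and the helper `pg_dumpall_command` is a hypothetical name; the flags are standard pg_dumpall options.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath


def pg_dumpall_command(host, user, backup_dir, port=5432, now=None):
    """Return the argument list for a timestamped pg_dumpall run."""
    now = now or datetime.now(timezone.utc)
    # A timestamp in the file name keeps earlier dumps from being overwritten.
    filename = f"pg_dumpall-{now:%Y%m%dT%H%M%SZ}.sql"
    outfile = PurePosixPath(backup_dir) / filename
    return [
        "pg_dumpall",
        f"--host={host}",
        f"--port={port}",
        f"--username={user}",
        f"--file={outfile}",
    ]


if __name__ == "__main__":
    # Placeholder connection details; replace them with your own.
    print(" ".join(pg_dumpall_command("localhost", "postgres", "/backups")))
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` from a machine that can reach the database, with the password supplied through a `.pgpass` file or the `PGPASSWORD` environment variable rather than on the command line.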

To keep a backup, make sure that you have a backup location on the host where your data is located. If you choose to back up to an external location, be sure to create a new backup site first. Then select the backup location that is most convenient for you. For example, choose a ‘private’ backup location for your database if you use a private cluster. If you use a public cluster, mark the data repository as read-only to reduce the risk of accidentally deleting your backup location.

Using Tanzu Postgres Operator

Using the Tanzu Postgres Operator to deploy your Postgres cluster on Kubernetes is straightforward. After you have installed the operator on your Kubernetes cluster, you must create a new resource named Postgres-operator. Next, add a monitor and an external IP to your cluster. Finally, confirm that your deployment is fully functional by checking its status.
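One way to check status from a script is to inspect the output of `kubectl get pods -o json` and look at each pod’s `Ready` condition. The sketch below assumes that output has already been captured as a string. The pod names in the sample are made up, and the helper `pods_not_ready` is a hypothetical name, not part of the operator.

```python
import json


def pods_not_ready(pod_list_json):
    """Return the names of pods whose Ready condition is not "True"."""
    pods = json.loads(pod_list_json).get("items", [])
    not_ready = []
    for pod in pods:
        conditions = pod.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            not_ready.append(pod["metadata"]["name"])
    return not_ready


# Trimmed-down sample in the shape of `kubectl get pods -o json` output.
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "postgres-0"},
         "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
        {"metadata": {"name": "postgres-1"},
         "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
    ]
})

if __name__ == "__main__":
    print(pods_not_ready(SAMPLE))  # prints ['postgres-1']
```

An empty list means every pod in the listing reports ready. A pod with no conditions yet, such as one that is still being scheduled, is treated as not ready.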

You can install the Tanzu Postgres Operator in a few different ways. You can use either a public or a private registry to deploy your cluster. Use a private registry if you deploy the application on a personal or air-gapped network. If you’re using a public registry, you’ll need to configure access to it first. Once the operator is running, you can use it to provision and manage Postgres clusters.

Using a Google Kubernetes Engine (GKE) cluster

This section covers deploying a Postgres database to a Google Kubernetes Engine (GKE) cluster. Google has made it easy to deploy and manage Postgres on Kubernetes with a simple console command.

The first step in deploying Postgres on GKE is to create a Docker image for the database. Then use that image to install and configure your Postgres database. This differs from a conventional Postgres deployment, so the important steps are outlined below. Deploying Postgres on a GKE cluster can save you time and trouble.

After creating the image, you must grant your default compute service account the Kubernetes Engine Admin and Editor roles. You can do this in the IAM section of the Cloud Console. Once the account is set up, you can install the Crunchy PostgreSQL Operator roles. Next, with Ansible 2.4.6 or higher installed, clone the Postgres-operator repository and confirm that you have cluster-admin privileges. Finally, create inventory files so that the Ansible installer can configure the operator.
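Before running the installer, it can help to confirm that the local Ansible meets the 2.4.6 minimum. The sketch below parses the first line of `ansible --version` output, which looks like `ansible 2.4.6.0` on older releases and `ansible [core 2.15.0]` on newer ones. The helper names `parse_version` and `meets_minimum` are hypothetical.

```python
import re

MINIMUM = (2, 4, 6)


def parse_version(first_line):
    """Extract a dotted version number as a tuple of integers."""
    match = re.search(r"\d+(?:\.\d+)+", first_line)
    if match is None:
        raise ValueError(f"no version number found in {first_line!r}")
    return tuple(int(part) for part in match.group().split("."))


def meets_minimum(first_line, minimum=MINIMUM):
    # Compare as integer tuples: as plain strings, "2.10" would sort before "2.4".
    return parse_version(first_line) >= minimum


if __name__ == "__main__":
    for line in ("ansible 2.4.6.0", "ansible 2.4.5", "ansible [core 2.15.0]"):
        print(line, "->", meets_minimum(line))
```

Cluster-admin privileges can be checked separately with `kubectl auth can-i '*' '*' --all-namespaces`, which answers yes or no for the current user.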